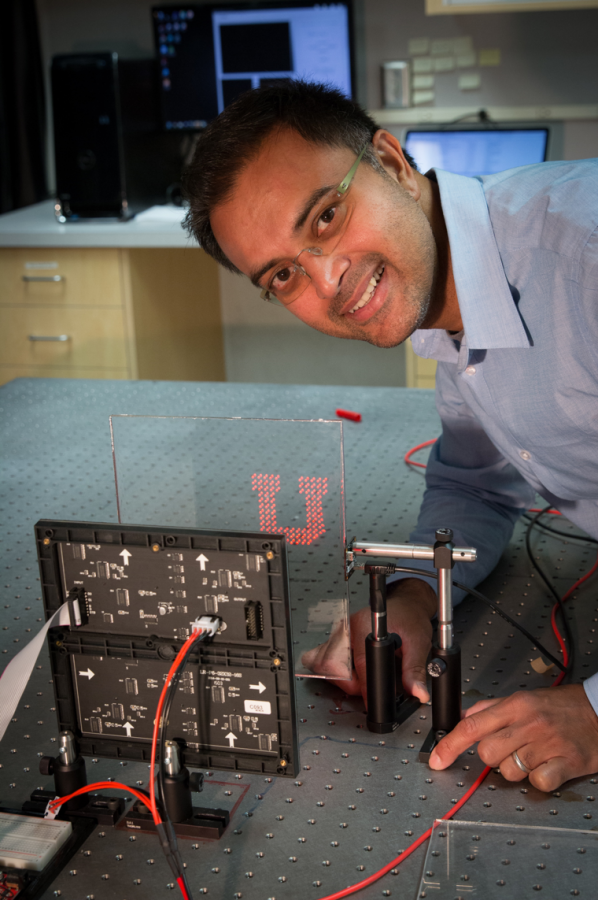

What if you could develop a camera whose images are interpreted by a computer running an algorithm instead of by humans? This is the question raised by Rajesh Menon, an associate professor of electrical and computer engineering at the University of Utah. Menon and his team of researchers have created a concept that uses an existing algorithm, see-through objects and a camera sensor, and that could do away with camera lenses entirely. The finding was detailed in “Computational Imaging Enables a ‘See-Through’ Lensless Camera,” a research paper by Menon and co-author Ganghun Kim, an engineering grad.

“Lenses are very human-centric because that’s the way our eyes have evolved to see,” Menon said, “but that’s not the only way to see the world.”

For centuries, images taken by cameras have been formed by optical lenses and deciphered by humans. “We have evolved to see the world in a specific way and our cameras basically reflect that, but that’s a very restrictive way to think about it,” Menon said. “If you have a machine, why restrict yourself to human capability?” In Menon’s setup, the image is decoded from a camera sensor that is not pointed directly at an object. “We put the image sensor pointing into the window,” said Menon. “And what it showed is, if you properly engineered the system, the image sensor not looking outside but into the window, and can actually see what’s outside.” After an existing algorithm decodes the sensor data, the computer reveals an image similar to the one set in front of the glass.

“If you took the lens off your camera and took a picture, the image would look like a cloudy digital blob,” said Vincent Horiuchi, public relations associate for the U’s College of Engineering. “But within that cloudy digital blob is enough information of the object you took a picture of.” Menon and his research team trained an algorithm to know what to look for. “In other words, if you want to shoot a tree, then you train the algorithm to know when to look for a tree,” Horiuchi said. “When it shoots the tree, the algorithm takes that information from that cloudy digital blob and recreates the tree.”
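One way to picture how a “cloudy digital blob” can still hold a recoverable image is a toy linear model. The sketch below is not the algorithm from Menon and Kim’s paper. It assumes a made-up, already-known “transfer” matrix describing how each point of a tiny one-dimensional scene spreads across 24 sensor pixels, then undoes that mixing with regularized least squares, a swapped-in textbook technique. Every name (`transfer`, `simulate_sensor`, `reconstruct`) and every number here is an illustrative assumption.

```python
import random


def simulate_sensor(transfer, scene):
    """Each sensor pixel records a weighted mix of every scene point."""
    return [sum(w * s for w, s in zip(row, scene)) for row in transfer]


def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def reconstruct(transfer, reading, ridge=1e-6):
    """Regularized least squares: solve (A^T A + ridge * I) x = A^T b."""
    n = len(transfer[0])
    ata = [
        [sum(row[i] * row[j] for row in transfer) + (ridge if i == j else 0.0)
         for j in range(n)]
        for i in range(n)
    ]
    atb = [sum(row[i] * b for row, b in zip(transfer, reading)) for i in range(n)]
    return solve_linear(ata, atb)


random.seed(0)
scene = [0.0, 0.2, 0.9, 1.0, 0.9, 0.2, 0.0, 0.0]  # toy 1-D "object"
# Hypothetical calibrated transfer matrix: 24 sensor pixels x 8 scene points.
transfer = [[random.random() for _ in scene] for _ in range(24)]
blob = simulate_sensor(transfer, scene)   # the scrambled sensor reading
recovered = reconstruct(transfer, blob)   # estimate of the original scene
print([round(v, 3) for v in recovered])
```

In this noise-free toy case the recovered values closely match `scene`. A real lensless system would face sensor noise and far larger images, which is where the calibration or training Horiuchi describes comes in.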

The technique Menon and his team developed could be a game-changer in surveillance, augmented reality (AR) glasses and smart cars. “There are many applications,” said Menon. “Your camera now becomes much lighter and [the development is] cheaper, thinner and easier to [integrate] with other things like windows or glass. Another interesting application could be smart glasses. Things like Magic Leap or Microsoft HoloLens could use this technology.”

AR glasses add a virtual element to a live view of the user’s surroundings, seen through a pair of glasses. Pokémon GO is an example of AR, albeit on a mobile device, since it overlays game characters on the phone camera’s view of the user’s surroundings. Virtual reality (VR), however, places the user in a fully artificial environment with no view of their actual surroundings, as when watching YouTube 360 videos or playing console games with VR goggles.

Whether it’s AR or VR, the International Data Corporation predicts that combined spending on the two technologies will total $215 billion by 2021. The U.S. led AR/VR spending in 2017 at $3.2 billion, ahead of Japan at $3 billion that same year.